27. Large Language Models

27.1. Pre-Reading

YouTube (0:00 - 8:35) Transformers, explained: Understand the model behind GPT, BERT, and T5

YouTube (0:00 - 3:03) Transformers (how LLMs work) explained visually; keep watching past 3:03 if you really want to see what’s going on!

Objectives

Understand why the transformer architecture and GPUs make LLMs possible.

Describe how LLMs exhibit emergent behavior.

Discuss ethical considerations with LLMs.

27.2. Transformers

27.3. Emergence

Emergence is when you combine relatively simple sets of rules and, past some threshold, a mind-blowing result manifests: behavior that none of the individual rules describes on its own.

This is one of my all-time favorite books.

Emergence is the opposite of reduction. Reduction tries to move from the whole to the parts, and it has been enormously successful. Emergence tries to generate the properties of the whole from an understanding of the parts. The two approaches can be mutually consistent.

For example, take two simple objects and the force of gravity, and the ability to measure the fourth dimension (time) emerges.
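A classic illustration of "simple rules, surprising whole" (not taken from the source) is Conway's Game of Life. Every cell follows the same two local rules, yet a five-cell pattern called a glider travels diagonally across the grid, a behavior that no rule mentions. The minimal sketch below assumes an unbounded board stored as a set of live `(row, col)` cells; the function name `step` is illustrative.

```python
from collections import Counter

def step(live):
    """Advance Conway's Game of Life by one generation.

    `live` is a set of (row, col) cells that are currently alive.
    """
    # Count how many live neighbours each nearby cell has.
    counts = Counter(
        (r + dr, c + dc)
        for r, c in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # The only rules: a cell is alive next generation if it has exactly 3 live
    # neighbours, or if it is alive now and has exactly 2.
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}

# A glider:  .#.
#            ..#
#            ###
glider = {(0, 1), (1, 2), (2, 0), (2, 1), (2, 2)}

state = glider
for _ in range(4):
    state = step(state)
print(sorted(state))  # [(1, 2), (2, 3), (3, 1), (3, 2), (3, 3)]
```

After four generations the same shape reappears shifted one row down and one column right. Nothing in `step` says "move"; the motion emerges from the local rules.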

LLMs as an Emergent Phenomenon

LLM Steps:

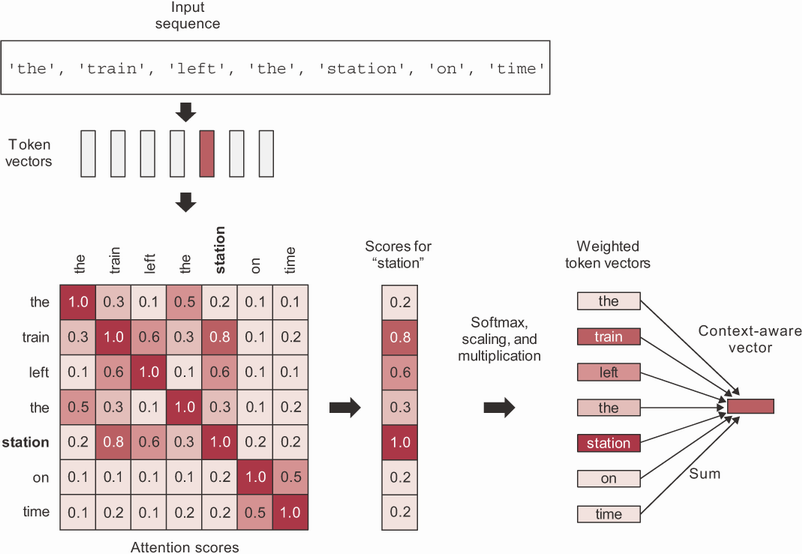

Use self-attention to compute relevancy between each word and every other word in the sentence.

Fig. 27.1 Self-attention for “station” in the sentence “The train left the station on time.” ~ Deep Learning with Python, 2nd Ed., Fig. 11.6.
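To make the self-attention step concrete, here is a minimal pure-Python sketch of scaled dot-product self-attention, assuming the standard query/key/value formulation. The embeddings `X` and the projection matrices `W_q`, `W_k`, `W_v` are random placeholders (a trained model learns them), so the printed weights for "station" will not reproduce the figure; the sketch only shows the mechanics. Each token's query is scored against every token's key, the scores are softmax-normalized into relevance weights, and each output is the weighted average of the value vectors.

```python
import math
import random

def softmax(xs):
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def project(vec, mat):
    # Row vector (length d_in) times matrix (d_in x d_out) -> length d_out.
    return [sum(vec[i] * mat[i][j] for i in range(len(vec))) for j in range(len(mat[0]))]

def self_attention(X, W_q, W_k, W_v):
    """Scaled dot-product self-attention over token vectors X.

    Returns (outputs, weights); weights[i][j] is how much token i attends to token j.
    """
    Q = [project(x, W_q) for x in X]
    K = [project(x, W_k) for x in X]
    V = [project(x, W_v) for x in X]
    scale = math.sqrt(len(K[0]))
    outputs, weights = [], []
    for q in Q:
        # Relevance of every token (including itself) to this query token.
        w = softmax([dot(q, k) / scale for k in K])
        weights.append(w)
        # Output is the attention-weighted average of the value vectors.
        outputs.append([sum(w[j] * V[j][c] for j in range(len(V))) for c in range(len(V[0]))])
    return outputs, weights

# Hypothetical toy setup: random, untrained 4-d embeddings and projections.
rng = random.Random(0)
d = 4

def rand_matrix(rows, cols):
    return [[rng.gauss(0, 1) for _ in range(cols)] for _ in range(rows)]

tokens = "The train left the station on time".split()
X = rand_matrix(len(tokens), d)
W_q, W_k, W_v = rand_matrix(d, d), rand_matrix(d, d), rand_matrix(d, d)

outputs, weights = self_attention(X, W_q, W_k, W_v)
i = tokens.index("station")
for tok, w in zip(tokens, weights[i]):
    print(f"{tok:>8}: {w:.3f}")
```

Every row of `weights` sums to 1, so each token's new representation is a blend of all tokens in the sentence, weighted by learned relevance.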

Use multi-head attention to learn different groups of features for each token.
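Under the same assumptions (pure Python, random untrained weights, illustrative helper names), multi-head attention can be sketched as several independent attention heads. Each head has its own `(W_q, W_k, W_v)` projecting into a smaller space, the head outputs are concatenated per token, and an output matrix `W_o` mixes them back to the model width. Because the heads have different weights, each can learn to pick up a different group of features.

```python
import math
import random

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def project(vec, mat):
    # Row vector (length d_in) times matrix (d_in x d_out) -> length d_out.
    return [sum(vec[i] * mat[i][j] for i in range(len(vec))) for j in range(len(mat[0]))]

def attention(X, W_q, W_k, W_v):
    """One head of scaled dot-product self-attention."""
    Q = [project(x, W_q) for x in X]
    K = [project(x, W_k) for x in X]
    V = [project(x, W_v) for x in X]
    scale = math.sqrt(len(K[0]))
    out = []
    for q in Q:
        w = softmax([dot(q, k) / scale for k in K])
        out.append([sum(w[j] * V[j][c] for j in range(len(V))) for c in range(len(V[0]))])
    return out

def multi_head_attention(X, heads, W_o):
    """Run each head with its own (W_q, W_k, W_v), concatenate, then mix with W_o."""
    per_head = [attention(X, W_q, W_k, W_v) for (W_q, W_k, W_v) in heads]
    # For each token, concatenate the outputs of all heads into one vector.
    concat = [sum((h[t] for h in per_head), []) for t in range(len(X))]
    return [project(c, W_o) for c in concat]

# Hypothetical toy sizes: d_model = 8 split across 2 heads of size 4.
rng = random.Random(1)
d_model, n_heads = 8, 2
d_head = d_model // n_heads

def rand_matrix(rows, cols):
    return [[rng.gauss(0, 1) for _ in range(cols)] for _ in range(rows)]

tokens = "The train left the station on time".split()
X = rand_matrix(len(tokens), d_model)
heads = [tuple(rand_matrix(d_model, d_head) for _ in range(3)) for _ in range(n_heads)]
W_o = rand_matrix(n_heads * d_head, d_model)

out = multi_head_attention(X, heads, W_o)
print(len(out), len(out[0]))  # 7 8
```

Splitting `d_model` evenly across the heads keeps the total work close to that of a single full-width head, while still giving the model several separate attention patterns per token.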

(Bonus) The Mind as an Emergent Phenomenon

There are a wide variety of understandings of what is meant by mind. The reductionist behaviorist tradition would argue that mind is an epiphenomenon of the activities of collections of neurons. They argue that minds do not in fact exist. At the opposite extreme, the idealist tradition going back to George Berkeley would argue that mind is all that exists, and matter is an epiphenomenon posited by minds for explanatory purposes. The Kantians would argue for the existence of both mind and matter, the latter being the ding an sich (thing in itself) that minds aspire to and cannot fully comprehend.

Our view is that all of the above is too simplistic. The universe, whatever its ultimate character, unfolds in a large number of emergences, all of which must be considered. The pruning rules of the emergences may go beyond the purely dynamic and exhibit a noetic character. It ultimately evolves into the mind, not as something that suddenly appears, but as a maturing character of an aging universe. This is something that we are just beginning to understand and, frustrating as it may be to admit such a degree of ignorance, we move ahead. That is our task as humans; some would call it knowing the mind of God and regard it as a vocation. ~ The Emergence of Everything, Harold J. Morowitz

27.4. Ethics

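Large language models are trained on very large datasets of human-written text, and they learn the patterns in that text, including its biases.

Furthermore, instruct (instruction-tuned) models are additionally trained on examples of how to respond to queries, often refined with human feedback.

Both of these steps can introduce bias into the model: pretraining absorbs whatever imbalances and stereotypes are present in the data, and instruction tuning reflects the choices of the people who write the examples and judge the responses.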
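The toy below is not how LLMs are built; it only illustrates, on an invented and deliberately skewed corpus, how any model that learns from word statistics reproduces the imbalance in its training text. It is a minimal trigram counter (the names `train_ngram` and `predict_next` are hypothetical) that predicts the most frequent next word after a two-word context.

```python
from collections import Counter, defaultdict

def train_ngram(corpus, n=3):
    """Count which word follows each (n-1)-word context in the training sentences."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for i in range(len(words) - n + 1):
            context = tuple(words[i:i + n - 1])
            follows[context][words[i + n - 1]] += 1
    return follows

def predict_next(model, context):
    """Return the most frequent continuation of `context` seen in training, or None."""
    counts = model.get(tuple(w.lower() for w in context))
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Invented, deliberately skewed corpus (hypothetical data for illustration only).
corpus = [
    "the nurse said she would help",
    "the nurse said she was busy",
    "the nurse said she is kind",
    "the nurse said he would help",
    "the engineer said he fixed it",
    "the engineer said he was busy",
    "the engineer said he is late",
    "the engineer said she fixed it",
]

model = train_ngram(corpus)
print(predict_next(model, ["nurse", "said"]))     # she
print(predict_next(model, ["engineer", "said"]))  # he
```

The model has no notion of fairness; it simply returns the majority pattern in its data. Real LLMs are vastly more sophisticated, but they are still fit to the statistics of their training text, which is why the composition of that text matters.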