35. Bias and Ethics

35.1. Pre-Reading

Take at least one Project Implicit test (an Implicit Association Test).

35.2. Types of Bias

Reading: A Survey on Bias and Fairness in Machine Learning, a USC publication funded by DARPA.

35.3. Amazon Resume Tool

From Reuters, "Insight - Amazon scraps secret AI recruiting tool that showed bias against women":

SAN FRANCISCO (Reuters) - Amazon.com Inc’s machine-learning specialists uncovered a big problem: their new recruiting engine did not like women.

The team had been building computer programs since 2014 to review job applicants’ resumes with the aim of mechanizing the search for top talent, five people familiar with the effort told Reuters.

Automation has been key to Amazon’s e-commerce dominance, be it inside warehouses or driving pricing decisions. The company’s experimental hiring tool used artificial intelligence to give job candidates scores ranging from one to five stars - much like shoppers rate products on Amazon, some of the people said.

“Everyone wanted this holy grail,” one of the people said. “They literally wanted it to be an engine where I’m going to give you 100 resumes, it will spit out the top five, and we’ll hire those.”

But by 2015, the company realized its new system was not rating candidates for software developer jobs and other technical posts in a gender-neutral way.

That is because Amazon’s computer models were trained to vet applicants by observing patterns in resumes submitted to the company over a 10-year period. Most came from men, a reflection of male dominance across the tech industry. (For a graphic on gender breakdowns in tech, see: tmsnrt.rs/2OfPWoD)

In effect, Amazon’s system taught itself that male candidates were preferable. It penalized resumes that included the word “women’s,” as in “women’s chess club captain.” And it downgraded graduates of two all-women’s colleges, according to people familiar with the matter. They did not specify the names of the schools.

Amazon edited the programs to make them neutral to these particular terms. But that was no guarantee that the machines would not devise other ways of sorting candidates that could prove discriminatory, the people said.

The Seattle company ultimately disbanded the team by the start of last year because executives lost hope for the project, according to the people, who spoke on condition of anonymity. Amazon’s recruiters looked at the recommendations generated by the tool when searching for new hires, but never relied solely on those rankings, they said.
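The mechanism the article describes (learning from historical hiring outcomes, then neutralizing specific terms) can be sketched with a toy token scorer. This is a minimal illustration under stated assumptions: the resumes, labels, and token names below are hypothetical, and smoothed per-token log-odds stand in for whatever model Amazon actually used, which the article does not describe.

```python
import math
from collections import Counter

# Hypothetical historical data: (resume tokens, past hiring decision).
# The labels encode a skewed hiring history, not candidate quality.
HISTORY = [
    ("python java chess_club captain", 1),
    ("python java", 1),
    ("java captain", 1),
    ("python captain", 1),
    ("python java womens chess_club captain", 0),
    ("python womens_college", 0),
    ("java womens captain", 0),
    ("java womens_college", 0),
]


def token_scores(history, blocked=frozenset()):
    """Smoothed log-odds of 'hired' for each token, skipping blocked tokens."""
    hired, rejected = Counter(), Counter()
    for text, label in history:
        tokens = set(text.split()) - set(blocked)
        (hired if label == 1 else rejected).update(tokens)
    vocab = set(hired) | set(rejected)
    # Add-one smoothing keeps tokens seen in only one class finite.
    return {t: math.log((hired[t] + 1) / (rejected[t] + 1)) for t in vocab}


def score(resume, scores):
    """Sum of learned token scores; unknown tokens contribute 0."""
    return sum(scores.get(t, 0.0) for t in set(resume.split()))


scores = token_scores(HISTORY)
print(scores["womens"] < 0)                 # the term itself is penalized

# "Neutralize" the term by removing it from the vocabulary.
patched = token_scores(HISTORY, blocked={"womens"})
print("womens" in patched)                  # the term is gone...
print(patched["womens_college"] < 0)        # ...but a proxy is still penalized
```

Blocking one token leaves correlated tokens (here the hypothetical `womens_college`) carrying the same historical signal, which is the concern the article's sources raise about term-by-term fixes.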

35.4. Explore Bias With What-If Tool

Compare two binary classification models that predict whether a person earns more than $50k a year based on their census information, and examine how different features affect each model's prediction relative to the other. ~ UCI Census Income Dataset

Under Web demos, select "Compare income classification on UCI census data".

In the dashboard, click Performance & Fairness.

Select Over-50K as the Ground Truth Feature.

Experiment with slices and optimization strategies.

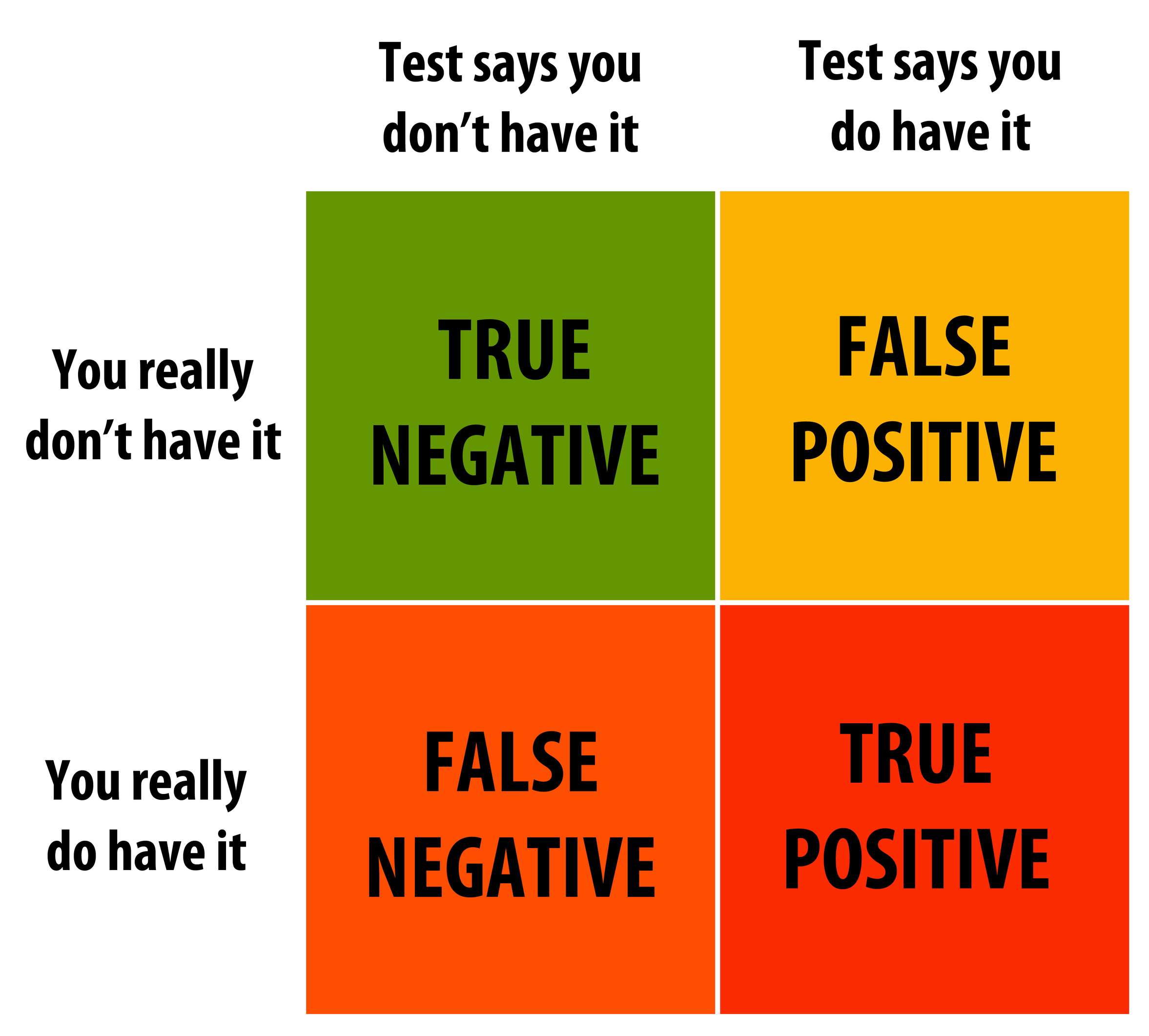

In particular, look at the false positives and false negatives.
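The dashboard reports these counts per slice; the sketch below shows the same bookkeeping by hand so the numbers are easier to interpret. It assumes made-up `(slice, true label, score)` triples and a hypothetical helper `confusion_by_slice`; it is not the What-If Tool's API, and real values would come from the census demo.

```python
from collections import defaultdict

# Hypothetical model outputs: (slice value, true Over-50K label, predicted score).
EXAMPLES = [
    ("Female", 1, 0.62), ("Female", 1, 0.41), ("Female", 0, 0.20),
    ("Female", 0, 0.55), ("Female", 0, 0.10), ("Female", 1, 0.48),
    ("Male", 1, 0.81), ("Male", 1, 0.66), ("Male", 0, 0.58),
    ("Male", 0, 0.35), ("Male", 0, 0.52), ("Male", 1, 0.45),
]


def confusion_by_slice(examples, threshold=0.5):
    """Count TP, FP, TN, FN per slice at a single decision threshold."""
    counts = defaultdict(lambda: {"TP": 0, "FP": 0, "TN": 0, "FN": 0})
    for group, label, score in examples:
        predicted = 1 if score >= threshold else 0
        if predicted == 1:
            key = "TP" if label == 1 else "FP"
        else:
            key = "FN" if label == 1 else "TN"
        counts[group][key] += 1
    return dict(counts)


for group, c in confusion_by_slice(EXAMPLES).items():
    fpr = c["FP"] / (c["FP"] + c["TN"])  # share of true negatives predicted positive
    fnr = c["FN"] / (c["FN"] + c["TP"])  # share of true positives predicted negative
    print(f"{group}: {c}  FPR={fpr:.2f}  FNR={fnr:.2f}")
```

With these made-up scores, one shared threshold of 0.5 yields a higher false negative rate for one slice and a higher false positive rate for the other, which is the kind of asymmetry to look for in the dashboard.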

How is the classification threshold adjusted under each optimization strategy?

What do these adjustments mean for each slice?
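To make the threshold questions concrete, here is a simplified sketch of per-slice threshold adjustment. It assumes hypothetical scores and a hypothetical helper `threshold_for_tpr` that picks, for each slice, the highest threshold reaching a target true positive rate. This resembles the idea behind an equal-opportunity-style strategy but is not the What-If Tool's actual optimization.

```python
import math

# Hypothetical (slice, true Over-50K label, model score) triples; made up.
EXAMPLES = [
    ("Female", 1, 0.62), ("Female", 1, 0.41), ("Female", 0, 0.20),
    ("Female", 0, 0.55), ("Female", 0, 0.10), ("Female", 1, 0.48),
    ("Male", 1, 0.81), ("Male", 1, 0.66), ("Male", 0, 0.58),
    ("Male", 0, 0.35), ("Male", 0, 0.52), ("Male", 1, 0.45),
]


def slice_rates(examples, group, threshold):
    """False positives, false negatives, and true positive rate for one slice."""
    rows = [(y, s) for g, y, s in examples if g == group]
    fp = sum(1 for y, s in rows if y == 0 and s >= threshold)
    fn = sum(1 for y, s in rows if y == 1 and s < threshold)
    positives = sum(1 for y, _ in rows if y == 1)
    return fp, fn, (positives - fn) / positives


def threshold_for_tpr(examples, group, target_tpr):
    """Highest threshold at which `group` reaches at least `target_tpr`."""
    assert 0 < target_tpr <= 1
    pos = sorted((s for g, y, s in examples if g == group and y == 1), reverse=True)
    needed = math.ceil(target_tpr * len(pos))
    return pos[needed - 1]  # the needed-th highest positive score


for group in ("Female", "Male"):
    single = slice_rates(EXAMPLES, group, 0.5)
    t = threshold_for_tpr(EXAMPLES, group, target_tpr=0.6)
    per_slice = slice_rates(EXAMPLES, group, t)
    print(f"{group}: single 0.5 -> (FP, FN, TPR) = {single}; "
          f"own threshold {t} -> {per_slice}")
```

In this toy data, the shared 0.5 threshold gives the Female slice a lower true positive rate than the Male slice. The per-slice thresholds lower one slice's threshold and raise the other's until both reach a true positive rate of 2/3, and the false positive counts shift as a side effect.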